3D reconstruction from multiple images is the creation of three-dimensional models from a set of images. It is the reverse process of obtaining 2D images from 3D scenes.

A 3D selfie is a 3D-printed scale replica of a person or their face. These three-dimensional selfies are also known as 3D portraits, 3D figurines, 3D-printed figurines, mini-me figurines and miniature statues. In 2014, the first 3D-printed bust of a president, Barack Obama, was made. 3D-digital-imaging specialists used handheld 3D scanners to create an accurate representation of the President.

Augmented reality (AR) is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory. AR can be defined as a system that fulfills three basic features: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects. The overlaid sensory information can be constructive (adding to the natural environment) or destructive (masking the natural environment). This experience is seamlessly interwoven with the physical world such that it is perceived as an immersive aspect of the real environment. In this way, augmented reality alters one's ongoing perception of a real-world environment, whereas virtual reality completely replaces the user's real-world environment with a simulated one. Augmented reality is related to two largely synonymous terms: mixed reality and computer-mediated reality.

Automatic number-plate recognition (ANPR) is a technology that uses optical character recognition on images to read vehicle registration plates to create vehicle location data. It can use existing closed-circuit television, road-rule enforcement cameras, or cameras specifically designed for the task. ANPR is used by police forces around the world for law enforcement purposes, including to check if a vehicle is registered or licensed. It is also used for electronic toll collection on pay-per-use roads and as a method of cataloguing the movements of traffic, for example by highways agencies.

A checkweigher is an automatic or manual machine for checking the weight of packaged commodities. It is normally found at the offgoing end of a production process and is used to ensure that the weight of a pack of the commodity is within specified limits. Any packs that are outside the tolerance are taken out of line automatically.
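The accept/reject decision described above can be sketched as a simple range check. This is a minimal illustration, not how any particular machine is programmed: the names `WeightLimits`, `within_tolerance`, and `sort_packs`, and the limit values in grams, are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class WeightLimits:
    """Hypothetical acceptance window for one product, in grams."""
    lower_g: float
    upper_g: float


def within_tolerance(weight_g: float, limits: WeightLimits) -> bool:
    # A pack passes only if its weight lies inside the specified limits.
    return limits.lower_g <= weight_g <= limits.upper_g


def sort_packs(weights_g, limits):
    """Split measured weights into accepted packs and packs taken out of line."""
    accepted, rejected = [], []
    for weight in weights_g:
        if within_tolerance(weight, limits):
            accepted.append(weight)
        else:
            rejected.append(weight)
    return accepted, rejected


# Assumed example values: a nominal 500 g pack with a +/- 5 g window.
limits = WeightLimits(lower_g=495.0, upper_g=505.0)
accepted, rejected = sort_packs([500.2, 494.9, 503.0, 505.1], limits)
print(accepted)  # [500.2, 503.0]
print(rejected)  # [494.9, 505.1]
```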

A circular review system is a system on board some armoured combat vehicles or tanks that gives the crew greater situational awareness of the vehicle's surroundings.

Closed-circuit television (CCTV), also known as video surveillance, is the use of video cameras to transmit a signal to a specific place, on a limited set of monitors. It differs from broadcast television in that the signal is not openly transmitted, though it may employ point-to-point (P2P), point-to-multipoint (P2MP), or mesh wired or wireless links. Though almost all video cameras fit this definition, the term is most often applied to those used for surveillance in areas that require additional security or ongoing monitoring. Though videotelephony is seldom called "CCTV", one exception is the use of video in distance education, where it is an important tool.

W

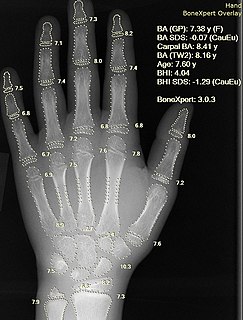

WComputer-aided detection (CADe), also called computer-aided diagnosis (CADx), are systems that assist doctors in the interpretation of medical images. Imaging techniques in X-ray, MRI, and ultrasound diagnostics yield a great deal of information that the radiologist or other medical professional has to analyze and evaluate comprehensively in a short time. CAD systems process digital images for typical appearances and to highlight conspicuous sections, such as possible diseases, in order to offer input to support a decision taken by the professional.

Content-based image retrieval, also known as query by image content (QBIC) and content-based visual information retrieval (CBVIR), is the application of computer vision techniques to the image retrieval problem, that is, the problem of searching for digital images in large databases. Content-based image retrieval contrasts with traditional concept-based approaches.
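One simple way to search by content rather than by keywords is to compare intensity histograms. The sketch below is an illustration under stated assumptions, not the method of any specific retrieval system: images are tiny grayscale grids given as lists of lists, the similarity measure is histogram intersection, and the function names and the sample `database` are hypothetical.

```python
def gray_histogram(image, bins=4, max_value=256):
    """Normalised intensity histogram of a grayscale image (list of rows)."""
    counts = [0] * bins
    total = 0
    for row in image:
        for pixel in row:
            counts[pixel * bins // max_value] += 1
            total += 1
    return [count / total for count in counts]


def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1 means the two histograms are identical."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def rank_by_similarity(query, database):
    """Return database keys ordered from most to least similar to the query."""
    query_hist = gray_histogram(query)
    scores = {
        name: histogram_intersection(query_hist, gray_histogram(image))
        for name, image in database.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Assumed toy database of 2x2 grayscale images.
database = {
    "dark": [[10, 20], [30, 40]],
    "bright": [[220, 230], [240, 250]],
    "mixed": [[10, 240], [20, 250]],
}
query = [[15, 25], [35, 45]]
ranking = rank_by_similarity(query, database)
print(ranking)  # ['dark', 'mixed', 'bright']
```

Practical systems typically use richer descriptors (colour, texture, shape, or learned features), but the query-by-example flow is the same: describe the query image, describe each stored image, and rank by similarity.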

Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else's likeness. While the act of faking content is not new, deepfakes leverage powerful techniques from machine learning and artificial intelligence to manipulate or generate visual and audio content with a high potential to deceive. The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as autoencoders or generative adversarial networks (GANs).

Eye tracking is the process of measuring either the point of gaze or the motion of an eye relative to the head. An eye tracker is a device for measuring eye positions and eye movement. Eye trackers are used in research on the visual system, in psychology, in psycholinguistics, in marketing, as an input device for human-computer interaction, and in product design. Eye trackers are also increasingly used for rehabilitative and assistive applications. There are a number of methods for measuring eye movement. The most popular variant uses video images from which the eye position is extracted. Other methods use search coils or are based on the electrooculogram.

A fingerprint is an impression left by the friction ridges of a human finger. The recovery of partial fingerprints from a crime scene is an important method of forensic science. Moisture and grease on a finger result in fingerprints on surfaces such as glass or metal. Deliberate impressions of entire fingerprints can be obtained by ink or other substances transferred from the peaks of friction ridges on the skin to a smooth surface such as paper. Fingerprint records normally contain impressions from the pad on the last joint of fingers and thumbs, though fingerprint cards also typically record portions of lower joint areas of the fingers.

Gesture recognition is a topic in computer science and language technology with the goal of interpreting human gestures via mathematical algorithms. Gestures can originate from any bodily motion or state but commonly originate from the face or hand. Current focuses in the field include emotion recognition from the face and hand gesture recognition. Users can use simple gestures to control or interact with devices without physically touching them. Many approaches use cameras and computer vision algorithms to interpret sign language. However, the identification and recognition of posture, gait, proxemics, and human behaviors is also the subject of gesture recognition techniques. Gesture recognition can be seen as a way for computers to begin to understand human body language, thus building a richer bridge between machines and humans than primitive text user interfaces or even GUIs, which still limit the majority of input to keyboard and mouse. It lets users interact naturally without any mechanical devices. Using gesture recognition, it is possible to point a finger at a screen so that the pointer moves accordingly. This could make conventional input devices redundant.

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions of human likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer-generated imagery have featured synthetic images of human-like characters digitally composited onto real or other simulated film material. Towards the end of the 2010s, deep learning artificial intelligence was applied to synthesize images and video that look like humans without need for human assistance once the training phase has been completed, whereas the old-school 7D-route required massive amounts of human work.

W

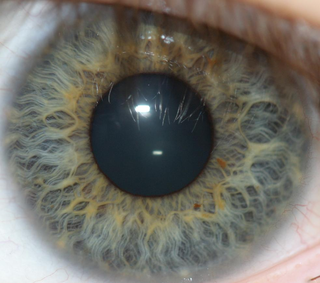

WIris recognition is an automated method of biometric identification that uses mathematical pattern-recognition techniques on video images of one or both of the irises of an individual's eyes, whose complex patterns are unique, stable, and can be seen from some distance. The discriminating powers of all biometric technologies depend on the amount of entropy they are able to encode and use in matching. Iris recognition is exceptional in this regard, enabling the avoidance of "collisions" even in cross-comparisons across massive populations. Its major limitation is that image acquisition from distances greater than a meter or two, or without cooperation, can be very difficult.

Machine vision (MV) is the technology and methods used to provide imaging-based automatic inspection and analysis for such applications as automatic inspection, process control, and robot guidance, usually in industry. Machine vision refers to many technologies, software and hardware products, integrated systems, actions, methods and expertise. Machine vision as a systems engineering discipline can be considered distinct from computer vision, a form of computer science. It attempts to integrate existing technologies in new ways and apply them to solve real-world problems. The term is the prevalent one for these functions in industrial automation environments but is also used for these functions in other environments such as security and vehicle guidance.

Mobile mapping is the process of collecting geospatial data from a mobile vehicle, typically fitted with a range of GNSS, photographic, radar, laser, LiDAR or any number of remote sensing systems. Such systems are composed of an integrated array of time-synchronised navigation sensors and imaging sensors mounted on a mobile platform. The primary outputs from such systems include GIS data, digital maps, and georeferenced images and video.

Morphing is a special effect in motion pictures and animations that changes one image or shape into another through a seamless transition. Traditionally such a depiction would be achieved through dissolving techniques on film. Since the early 1990s, this has been replaced by computer software to create more realistic transitions. A similar method is applied to audio recordings, for example, by changing voices or vocal lines.

In computer vision, object co-segmentation is a special case of image segmentation, which is defined as jointly segmenting semantically similar objects in multiple images or video frames.

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.

Optical braille recognition is the act of capturing and processing images of braille characters into natural language characters. It is used to convert braille documents into text for people who cannot read them, and for preservation and reproduction of the documents.

Optical character recognition or optical character reader (OCR) is the electronic or mechanical conversion of images of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a photo of a document, a scene-photo or from subtitle text superimposed on an image.

Pedestrian detection is an essential and significant task in any intelligent video surveillance system, as it provides the fundamental information for semantic understanding of the video footage. It has an obvious extension to automotive applications due to the potential for improving safety systems. As of 2017, many car manufacturers offered this as an advanced driver-assistance system (ADAS) option.

W

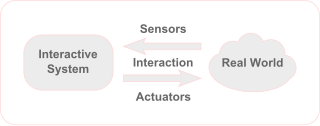

WPhysical computing involves interactive systems that can sense and respond to the world around them. While this definition is broad enough to encompass systems such as smart automotive traffic control systems or factory automation processes, it is not commonly used to describe them. In a broader sense, physical computing is a creative framework for understanding human beings' relationship to the digital world. In practical use, the term most often describes handmade art, design or DIY hobby projects that use sensors and microcontrollers to translate analog input to a software system, and/or control electro-mechanical devices such as motors, servos, lighting or other hardware.

A red light camera is a type of traffic enforcement camera that captures an image of a vehicle that has entered an intersection in spite of the traffic signal indicating red. By automatically photographing vehicles that run red lights, the camera produces evidence that assists authorities in their enforcement of traffic laws. Generally the camera is triggered when a vehicle enters the intersection after the traffic signal has turned red.
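The trigger condition in the last sentence can be sketched as a timestamp comparison. This is an illustrative model only: the `SignalPhase` record, the `violations` function, and the `grace_s` parameter (a hypothetical delay after the signal turns red before entries are flagged) are assumptions for the example, not a description of any deployed system.

```python
from dataclasses import dataclass


@dataclass
class SignalPhase:
    """One phase of a signal cycle; times are seconds from an arbitrary origin."""
    color: str
    start_s: float
    end_s: float


def violations(phases, entry_times_s, grace_s=0.0):
    """Return the entry times that fall inside a red phase.

    grace_s is a hypothetical delay after the start of red during which
    entries are not flagged.
    """
    flagged = []
    for entry in entry_times_s:
        for phase in phases:
            if phase.color == "red" and phase.start_s + grace_s <= entry < phase.end_s:
                flagged.append(entry)
                break
    return flagged


# Assumed cycle: 30 s green, 3 s amber, 27 s red.
phases = [
    SignalPhase("green", 0.0, 30.0),
    SignalPhase("amber", 30.0, 33.0),
    SignalPhase("red", 33.0, 60.0),
]
entries = [12.0, 31.5, 33.4, 47.0]
print(violations(phases, entries))               # [33.4, 47.0]
print(violations(phases, entries, grace_s=0.5))  # [47.0]
```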

Reverse image search is a content-based image retrieval (CBIR) query technique that involves providing the CBIR system with a sample image that it will then base its search upon; in terms of information retrieval, the sample image is what formulates a search query. In particular, reverse image search is characterized by a lack of search terms. This effectively removes the need for a user to guess at keywords or terms that may or may not return a correct result. Reverse image search also allows users to discover content related to a specific sample image, gauge the popularity of an image, and find manipulated versions and derivative works.

A smart camera or intelligent camera is a machine vision system which, in addition to image capture circuitry, is capable of extracting application-specific information from the captured images, along with generating event descriptions or making decisions that are used in an intelligent and automated system. A smart camera is a self-contained, standalone vision system with a built-in image sensor in the housing of an industrial video camera. It contains all necessary communication interfaces, e.g. Ethernet, as well as industry-proof 24 V I/O lines for connection to a PLC, actuators, relays or pneumatic valves. It is not necessarily larger than an industrial or surveillance camera. A capability in machine vision generally means a degree of development such that these capabilities are ready for use on individual applications. This architecture has the advantage of a more compact volume compared to PC-based vision systems and often achieves lower cost, at the expense of a somewhat simpler user interface. Less powerful versions are often referred to as smart sensors.

StyleGAN is a novel generative adversarial network (GAN) introduced by Nvidia researchers in December 2018, and made source available in February 2019.

A traffic enforcement camera is a camera which may be mounted beside or over a road or installed in an enforcement vehicle to detect motoring offenses, including speeding, vehicles going through a red traffic light, vehicles going through a toll booth without paying, unauthorized use of a bus lane, or for recording vehicles inside a congestion charge area. It may be linked to an automated ticketing system.

Traffic-sign recognition (TSR) is a technology by which a vehicle is able to recognize the traffic signs put on the road, e.g. "speed limit", "children" or "turn ahead". It is one of the features collectively called advanced driver-assistance systems (ADAS). The technology is being developed by a variety of automotive suppliers. It uses image processing techniques to detect the traffic signs. The detection methods can generally be divided into color-based, shape-based and learning-based methods.
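As an illustration of the color-based family only, the sketch below flags pixels whose hue falls in a red band and measures how much of an image patch they cover. The hue band, the saturation and brightness thresholds, and the function names are assumptions chosen for the example; real detectors tune such values and usually combine color cues with shape or learned models.

```python
import colorsys


def is_sign_red(r, g, b, min_saturation=0.5, min_value=0.3):
    """Return True if an 8-bit RGB pixel looks like saturated red.

    The hue band (within 15 degrees of 0) and both thresholds are
    assumed values for illustration.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    hue_deg = h * 360
    in_red_band = hue_deg <= 15 or hue_deg >= 345
    return in_red_band and s >= min_saturation and v >= min_value


def red_fraction(pixels):
    """Fraction of pixels in a patch classified as sign-red."""
    pixels = list(pixels)
    return sum(is_sign_red(*pixel) for pixel in pixels) / len(pixels)


# Assumed toy patch: three reddish pixels and one white pixel.
patch = [(200, 20, 30), (210, 25, 25), (255, 255, 255), (190, 10, 40)]
print(red_fraction(patch))  # 0.75
```

A patch with a high red fraction would then be passed to a later stage, such as a shape check or a classifier, to decide which sign it is.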

Virtual reality applications are applications that make use of virtual reality (VR), an immersive sensory experience that digitally simulates a virtual environment. Applications have been developed in a variety of domains, such as education, architectural and urban design, digital marketing and activism, engineering and robotics, entertainment, fine arts, healthcare and clinical therapies, heritage and archaeology, occupational safety, social science and psychology.

Visual temporal attention is a special case of visual attention that involves directing attention to a specific instant in time. Similar to its spatial counterpart, visual spatial attention, these attention modules have been widely implemented in video analytics in computer vision to provide enhanced performance and human-interpretable explanations of deep learning models.
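One common way to express attention over time is to turn a score per frame into weights with a softmax and then take a weighted average of the per-frame features. The sketch below assumes that formulation; it is not taken from any specific model, and the function names, feature vectors, and scores are hypothetical.

```python
import math


def softmax(scores):
    """Convert arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def temporal_attention_pool(frame_features, frame_scores):
    """Pool per-frame feature vectors into one vector using attention weights."""
    weights = softmax(frame_scores)
    dim = len(frame_features[0])
    pooled = [
        sum(weight * features[i] for weight, features in zip(weights, frame_features))
        for i in range(dim)
    ]
    return pooled, weights


# Assumed example: three frames with 2-D features; the second frame scores highest.
features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
scores = [0.1, 2.0, 0.3]
pooled, weights = temporal_attention_pool(features, scores)
print(weights)  # the largest weight falls on the second frame
print(pooled)
```

The weights double as an explanation: inspecting them shows which instants the model relied on most.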

W

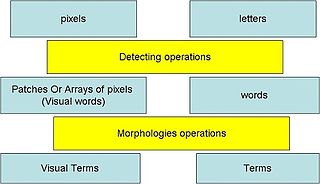

WVisual words, as used in image retrieval systems, refer to small parts of an image which carry some kind of information related to the features, or changes occurring in the pixels such as the filtering, low-level feature descriptors.

In virtual reality (VR), positional tracking detects the precise position of the head-mounted displays, controllers, other objects or body parts within Euclidean space. Because the purpose of VR is to emulate perceptions of reality, it is paramount that positional tracking be both accurate and precise so as not to break the illusion of three-dimensional space. Several methods of tracking the position and orientation of the display and any associated objects or devices have been developed to achieve this. All of these methods use sensors which repeatedly record signals from transmitters on or near the tracked object(s), and then send that data to the computer in order to maintain an approximation of their physical locations. By and large, these physical locations are identified and defined using one or more of three coordinate systems: the Cartesian rectilinear system, the spherical polar system, and the cylindrical system. Many interfaces have also been designed to monitor and control one’s movement within and interaction with the virtual 3D space; such interfaces must work closely with positional tracking systems to provide a seamless user experience.
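The three coordinate systems named above describe the same point in different ways. The sketch below converts a Cartesian position to spherical and cylindrical form; it assumes the physics convention for spherical coordinates (polar angle measured from the +z axis, azimuth measured in the x-y plane), and the function names are chosen for the example.

```python
import math


def cartesian_to_spherical(x, y, z):
    """Return (r, theta, phi): radius, polar angle from +z, azimuth in the x-y plane."""
    r = math.sqrt(x * x + y * y + z * z)
    theta = math.acos(z / r) if r > 0 else 0.0
    phi = math.atan2(y, x)
    return r, theta, phi


def spherical_to_cartesian(r, theta, phi):
    """Inverse of cartesian_to_spherical under the same convention."""
    return (
        r * math.sin(theta) * math.cos(phi),
        r * math.sin(theta) * math.sin(phi),
        r * math.cos(theta),
    )


def cartesian_to_cylindrical(x, y, z):
    """Return (rho, phi, z): distance from the z axis, azimuth, and height."""
    return math.hypot(x, y), math.atan2(y, x), z


# Assumed example position of a tracked object, in metres.
position = (3.0, 4.0, 5.0)
print(cartesian_to_cylindrical(*position))
print(cartesian_to_spherical(*position))
```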

W

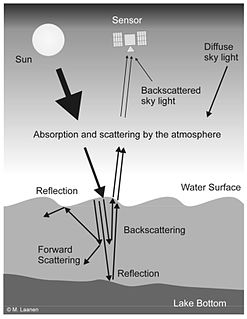

WWater Remote Sensing studies the color of water through the observation of the spectrum of water leaving radiation. From the study of this spectrum, the concentration of optically active components of the upper layer of the water body can be concluded via specific algorithms. Water quality monitoring by remote sensing and close-range instruments has obtained considerable attention since the founding of EU Water Framework Directive.