W

WAikuma is an Android App for collecting speech recordings with time-aligned translations. The app includes a text-free interface for consecutive interpretation, designed for users who are not literate. The Aikuma won Grand Prize in the Open Source Software World Challenge (2013).

W

WArabic Ontology is a linguistic ontology for the Arabic language, which can be used as an Arabic Wordnet with ontologically-clean content. People use it also as a tree of the concepts/meanings of the Arabic terms. It is a formal representation of the concepts that the Arabic terms convey, and its content is ontologically well-founded, and benchmarked to scientific advances and rigorous knowledge sources rather than to speakers’ naïve beliefs as wordnets typically do . The Ontology tree can be explored online.

W

WThe Association for Computational Linguistics (ACL) is the international scientific and professional society for people working on problems involving natural language and computation. An annual meeting is held each summer in locations where significant computational linguistics research is carried out. It was founded in 1962, originally named the Association for Machine Translation and Computational Linguistics (AMTCL). It became the ACL in 1968.

W

WThe Australasian Language Technology Association (ALTA) promotes language technology research and development in Australia and New Zealand. ALTA organises regular events for the exchange of research results and for academic and industrial training, and co-ordinates activities with other professional societies. ALTA is a founding regional organization of the Asian Federation of Natural Language Processing (AFNLP).

W

WBabelfy is a software algorithm for the disambiguation of text written in any language. Specifically, Babelfy performs the tasks of multilingual Word Sense Disambiguation and Entity Linking. Babelfy is based on the BabelNet multilingual semantic network and performs disambiguation and entity linking in three steps:It associates with each vertex of the BabelNet semantic network, i.e., either concept or named entity, a semantic signature, that is, a set of related vertices. This is a preliminary step which needs to be performed only once, independently of the input text. Given an input text, it extracts all the linkable fragments from this text and, for each of them, lists the possible meanings according to the semantic network. It creates a graph-based semantic interpretation of the whole text by linking the candidate meanings of the extracted fragments using the previously-computed semantic signatures. It then extracts a dense subgraph of this representation and selects the best candidate meaning for each fragment.

W

WBabelNet is a multilingual lexicalized semantic network and ontology developed at the NLP group of the Sapienza University of Rome. BabelNet was automatically created by linking Wikipedia to the most popular computational lexicon of the English language, WordNet. The integration is done using an automatic mapping and by filling in lexical gaps in resource-poor languages by using statistical machine translation. The result is an encyclopedic dictionary that provides concepts and named entities lexicalized in many languages and connected with large amounts of semantic relations. Additional lexicalizations and definitions are added by linking to free-license wordnets, OmegaWiki, the English Wiktionary, Wikidata, FrameNet, VerbNet and others. Similarly to WordNet, BabelNet groups words in different languages into sets of synonyms, called Babel synsets. For each Babel synset, BabelNet provides short definitions in many languages harvested from both WordNet and Wikipedia.

W

WCo-occurrence networks are generally used to provide a graphic visualization of potential relationships between people, organizations, concepts, biological organisms like bacteria or other entities represented within written material. The generation and visualization of co-occurrence networks has become practical with the advent of electronically stored text compliant to text mining.

W

WComputational Linguistics is a quarterly peer-reviewed open-access academic journal in the field of computational linguistics. It is published by MIT Press for the Association for Computational Linguistics (ACL). The journal includes articles, squibs and book reviews. It was established as the American Journal of Computational Linguistics in 1974 by David Hays and was originally published only on microfiche until 1978. George Heidorn transformed it into a print journal in 1980, with quarterly publication. In 1984 the journal obtained its current title. It has been open-access since 2009.

W

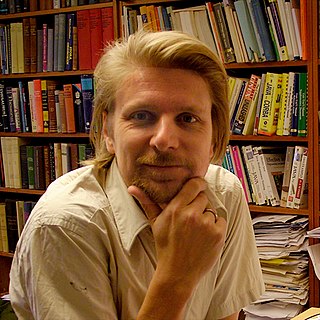

WMichel Frederic DeGraff is a Haitian creolist who has served on the board of the Journal of Haitian Studies. He is a tenured professor at the Massachusetts Institute of Technology and a founding member of the Haitian Creole Academy. His field of scholarship is Creole language, also known as Lang Kreyòl Linguistics. He is known for his advocacy towards the recognition of Haitian Creole as a full-fledged language. In the fall of 2012, he received a $1 million grant from the National Science Foundation to introduce online Creole language materials in the teaching of STEM in Haiti. He believes that Haitian children should be taught in their native language at all levels of instruction, contrary to the tradition of teaching them in French. Degraff believes that instruction in French, a foreign language for most Haitian children, hinders their creativity and their ability to excel.

W

WIn computational linguistics, FrameNet is a project housed at the International Computer Science Institute in Berkeley, California which produces an electronic resource based on a theory of meaning called frame semantics. FrameNet reveals for example that the sentence "John sold a car to Mary" essentially describes the same basic situation as "Mary bought a car from John", just from a different perspective. A semantic frame can be thought of as a conceptual structure describing an event, relation, or object and the participants in it. The FrameNet lexical database contains over 1,200 semantic frames, 13,000 lexical units and 202,000 example sentences. FrameNet is largely the creation of Charles J. Fillmore, who developed the theory of frame semantics that the project is based on, and was initially the project leader when the project began in 1997. Collin Baker became the project manager in 2000. The FrameNet project has been influential in both linguistics and natural language processing, where it led to the task of automatic Semantic Role Labeling.

W

WGenerative Pre-trained Transformer 2 (GPT-2) is an open-source artificial intelligence created by OpenAI in February 2019. A transformer machine learning model, GPT-2 uses deep learning to translate text, answer questions, summarize passages, and generate human-readable text output on a level that is often indistinguishable from that of humans. It is a general-purpose learner; it was not specifically trained to do any of these tasks, and its ability to perform them is an extension of its general ability to accurately synthesize the next item in an arbitrary sequence.

W

WIn linguistics, Heaps' law is an empirical law which describes the number of distinct words in a document as a function of the document length. It can be formulated as

W

WiGlue is an experimental database with detailed search options, containing entities and information editing tool. It organizes interrelated images, videos, individuals, institutions, objects, websites, geographical locations into cohesive data structures.

W

WInterlingual machine translation is one of the classic approaches to machine translation. In this approach, the source language, i.e. the text to be translated is transformed into an interlingua, i.e., an abstract language-independent representation. The target language is then generated from the interlingua. Within the rule-based machine translation paradigm, the interlingual approach is an alternative to the direct approach and the transfer approach.

W

WIntraText is a digital library that offers an interface while meeting formal requirements. Texts are displayed in a hypertextual way, based on a Tablet PC interface. By linking words in the text, it provides Concordances, word lists, statistics and links to cited works. Most content is available under a Creative Commons license It also offers publishing services that enable similar advantages.

W

WJussi Karlgren is a Swedish computational linguist, adjunct professor at KTH, and co-founder of text analytics company Gavagai AB. He holds a PhD in computational linguistics from Stockholm University, and the title of docent of language technology at Helsinki University.

W

WLIVAC is an uncommon language corpus dynamically maintained since 1995. Different from other existing corpora, LIVAC has adopted a rigorous and regular as well as "Windows" approach in processing and filtering massive media texts from representative Chinese speech communities such as Hong Kong, Macau, Taipei, Singapore, Shanghai, Beijing, as well as Guangzhou, and Shenzhen. The contents are thus deliberately repetitive in most cases, represented by textual samples drawn from editorials, local and international news, cross-Formosan Straits news, as well as news on finance, sports and entertainment. By 2019, 2.7 billion characters of news media texts have been filtered so far, of which 680 million characters have been processed and analyzed and have yielded an expanding Pan-Chinese dictionary of 2.3 million words from the Pan-Chinese printed media. Through rigorous analysis based on computational linguistic methodology, LIVAC has at the same time accumulated a large amount of accurate and meaningful statistical data on the Chinese language and their speech communities in the Pan-Chinese region, and the results show considerable and important variations.

W

WMeaningCloud is a Software as a Service product that enables users to embed text analytics and semantic processing in any application or system. It was previously branded as Textalytics.

W

WMUSA was an early prototype of Speech Synthesis machine started in 1975.

W

WIn the fields of computational linguistics and probability, an n-gram is a contiguous sequence of n items from a given sample of text or speech. The items can be phonemes, syllables, letters, words or base pairs according to the application. The n-grams typically are collected from a text or speech corpus. When the items are words, n-grams may also be called shingles.

W

WNatural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The result is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves.

W

WOptical braille recognition is the act of capturing and processing images of braille characters into natural language characters. It is used to convert braille documents for people who cannot read them into text, and for preservation and reproduction of the documents.

W

WOptical character recognition or optical character reader (OCR) is the electronic or mechanical conversion of images of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a photo of a document, a scene-photo or from subtitle text superimposed on an image.

W

WPheme is a 36-month research project begun in 2014 into establishing the veracity of claims made on the internet.

W

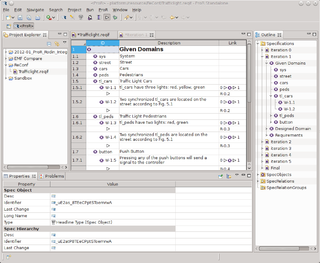

WThe Requirements Modeling Framework (RMF) is an open-source software framework for working with requirements based on the ReqIF standard. RMF consists of a core allowing reading, writing and manipulating ReqIF data, and a user interface allowing to inspect and edit request data.

W

WResearch on Language and Computation was a quarterly peer-reviewed academic journal covering research in computational linguistics and natural language processing. It was established in 2003 and ceased publication in December 2010. The journal was published by Springer Science+Business Media.

W

WSpeech-generating devices (SGDs), also known as voice output communication aids, are electronic augmentative and alternative communication (AAC) systems used to supplement or replace speech or writing for individuals with severe speech impairments, enabling them to verbally communicate. SGDs are important for people who have limited means of interacting verbally, as they allow individuals to become active participants in communication interactions. They are particularly helpful for patients suffering from amyotrophic lateral sclerosis (ALS) but recently have been used for children with predicted speech deficiencies.

W

WSubvocal recognition (SVR) is the process of taking subvocalization and converting the detected results to a digital output, aural or text-based.

W

WTatoeba is a free collaborative online database of example sentences geared towards foreign language learners. Its name comes from the Japanese term "tatoeba" (例えば), meaning "for example". Unlike other online dictionaries, which focus on words, Tatoeba focuses on translation of complete sentences. In addition, the structure of the database and interface emphasize one-to-many relationships. Not only can a sentence have multiple translations within a single language, but its translations into all languages are readily visible, as are indirect translations that involve a chain of stepwise links from one language to another.

W

WText Nailing (TN) is an information extraction method of semi-automatically extracting structured information from unstructured documents. The method allows a human to interactively review small blobs of text out of a large collection of documents, to identify potentially informative expressions. The identified expressions can be used then to enhance computational methods that rely on text as well as advanced natural language processing (NLP) techniques. TN combines two concepts: 1) human-interaction with narrative text to identify highly prevalent non-negated expressions, and 2) conversion of all expressions and notes into non-negated alphabetical-only representations to create homogeneous representations.

W

WIn linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data. The exploitation of treebank data has been important ever since the first large-scale treebank, The Penn Treebank, was published. However, although originating in computational linguistics, the value of treebanks is becoming more widely appreciated in linguistics research as a whole. For example, annotated treebank data has been crucial in syntactic research to test linguistic theories of sentence structure against large quantities of naturally occurring examples.

W

WVoice computing is the discipline that develops hardware or software to process voice inputs.

W

WWordNet is a lexical database of semantic relations between words in more than 200 languages. WordNet links words into semantic relations including synonyms, hyponyms, and meronyms. The synonyms are grouped into synsets with short definitions and usage examples. WordNet can thus be seen as a combination and extension of a dictionary and thesaurus. While it is accessible to human users via a web browser, its primary use is in automatic text analysis and artificial intelligence applications. WordNet was first created in the English language and the English WordNet database and software tools have been released under a BSD style license and are freely available for download from that WordNet website.

W

WIn probability theory and statistics, the zeta distribution is a discrete probability distribution. If X is a zeta-distributed random variable with parameter s, then the probability that X takes the integer value k is given by the probability mass function

W

WZipf's law is an empirical law formulated using mathematical statistics that refers to the fact that many types of data studied in the physical and social sciences can be approximated with a Zipfian distribution, one of a family of related discrete power law probability distributions. Zipf distribution is related to the zeta distribution, but is not identical.